A Computer Program Taught Itself to Dance, and After 2 Days It's Better Than I Am

The machines are learning how to cut a rug.

The Lulu Art Group and deep-learning software company Peltarion developed a system, chor-rnn, that can learn the style of a human choreographer and then produce its own unique dance material. Using a Microsoft Kinect camera, they captured five hours of contemporary dance motion from a professional dancer and fed the data into an artificial neural network. The results went from laughable to laudable pretty quickly.

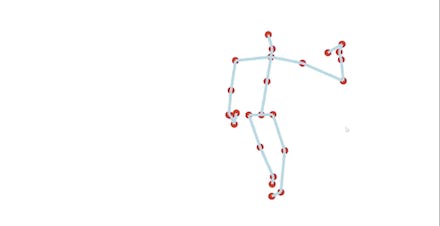

It didn't come out of the gate grooving. Ten minutes into deep recurrent neural network training and chill, chor-rnn gives you this look.

It's erratic; Peltarion described its output as "more or less random." The system has some understanding of human joints, but that alone does not make for a come-hither gyration.

But six hours into training, chor-rnn starts to understand how these joints relate to one another, Peltarion wrote. The result is more human-like movement and less erratic scribbling. At this point, the stick figure appears to have learned how to roller-skate while drunk.

Forty-eight hours in, though, chor-rnn more fully understands how the joints relate, as well as choreography's three basic levels of abstraction: syntax, style and basic semantics.

The stick figure starts producing numerous dance moves. It shakes its hips. It takes a stab at the moonwalk. It throws its hands in the air like it just don't care.

The Lulu Art Group and Peltarion believe this groovy virtual companion can act as a "creativity catalyst or choreographic partner" for human choreographers. They hope to test the concept over a longer period, with multiple dancers and potentially with musical composition. The future of choreography is a lean, mean, dancing stick-figure machine.